In this blog post, I'm going to show you how to install and run an LLM in a web application using the Transformers.js library. The following demo application is written with Ionic/Angular, but Transformers.js is framework-agnostic and can be used with any JavaScript framework or just plain JavaScript.

Under the hood, Transformers.js uses WebAssembly, WebGPU, and ONNX Runtime to run the model in JavaScript.

ONNX is an open format for machine learning models, and ONNX Runtime is the engine that executes them. You can convert models from many different machine learning frameworks to the ONNX format and run them wherever ONNX Runtime is available.

WebGPU is an API for GPU acceleration in the browser. It is a successor to WebGL and is designed to be more performant and easier to use. You can check caniuse.com to see what browsers support WebGPU.

Transformers.js is modeled after the popular Transformers library for Python. So, if you are familiar with Transformers in Python, you will feel right at home with Transformers.js.

Demo

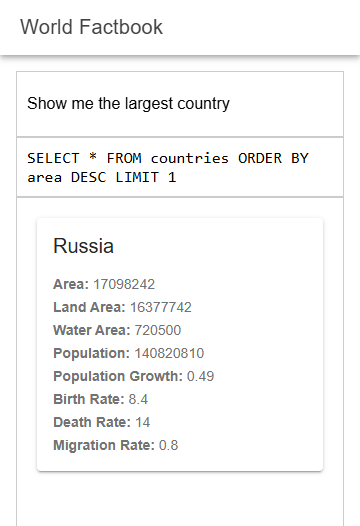

The demo application is a simple Ionic/Angular application that shows information about countries. The user can enter a natural language question or prompt. The application then utilizes an LLM to generate a SQL SELECT statement from this question and executes the statement on a SQLite database. The result of the query is then displayed in a list.

The application uses the @sqlite.org/sqlite-wasm library to run the database in the browser. The library is the official WebAssembly port of SQLite, allowing you to open and query SQLite databases in the browser.

The country data comes from the factbook.json project. The database is created with a Go program that reads the data from factbook.json, generates a SQLite database with the following schema, and imports the data into the database.

    CREATE TABLE countries (
      id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL,
      name TEXT NOT NULL,
      area INTEGER,
      area_land INTEGER,
      area_water INTEGER,
      population INTEGER,
      population_growth REAL,
      birth_rate REAL,
      death_rate REAL,
      migration_rate REAL,
      flag_description TEXT
    )

After the Go program has successfully imported the data, it writes the SQLite database to the file countries.sqlite3.

I copied that file into the src/assets folder of the Angular application.

The application uses Meta's Llama 3.2 1B model for the SQL generation part. This is the smallest version of the Llama 3.2 models, with one billion parameters, which makes it suitable for running on an edge device like a browser.

The model I'm using in this demo is available on Hugging Face: onnx-community/Llama-3.2-1B-Instruct-q4f16.

It's the model in ONNX format and quantized to make it even smaller. Quantization is a technique to reduce the precision of the model weights, which can significantly decrease memory and computational requirements. This makes it feasible to run in resource-constrained environments like a browser.
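To get a feeling for what quantization buys, here is a back-of-the-envelope sketch. The function name `estimateWeightBytes` is my own, and the parameter count is the rounded one billion from above, so treat the numbers as rough orders of magnitude rather than exact file sizes.

```typescript
// Rough estimate: bytes needed to store the weights at a given precision.
// Hypothetical helper for illustration; it ignores quantization scales,
// tokenizer files, and tensors that are kept at a higher precision.
function estimateWeightBytes(parameters: number, bitsPerWeight: number): number {
  return (parameters * bitsPerWeight) / 8;
}

const oneBillion = 1_000_000_000;
const GB = 1_000_000_000;

// 32-bit floats, 16-bit floats, and 4-bit integers for the same model.
for (const bits of [32, 16, 4]) {
  const gigabytes = estimateWeightBytes(oneBillion, bits) / GB;
  console.log(`${bits}-bit weights: about ${gigabytes} GB`);
}
```

The real q4f16 file is larger than the pure 4-bit estimate, presumably because a quantized model also has to store scaling data and does not necessarily keep every tensor at 4 bits.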

Note that you need the ONNX version of a model to run it with Transformers.js. According to the v3 announcement blog post, there are more than 1200 models already available in the ONNX format. If you want to run a model that is not available in ONNX format yet, you can find a Python script in the Transformers.js GitHub repository that converts a PyTorch model to ONNX format. The official documentation has more information about converting models.

Installation

Besides the usual Ionic/Angular dependencies, I installed the two libraries mentioned above:

    npm install @huggingface/transformers
    npm install @sqlite.org/sqlite-wasm

Both libraries have parts that are written in WebAssembly. To use them, I copy the WebAssembly files into the project with the following angular.json configuration:

    {
      "glob": "**/*.wasm",
      "input": "./node_modules/@sqlite.org/sqlite-wasm/sqlite-wasm/jswasm",
      "output": "./sqlite-wasm"
    },
    {
      "glob": "*",
      "input": "./node_modules/@huggingface/transformers/dist",
      "output": "./transformers-wasm"
    }

Copying the WebAssembly for the Transformers.js library is optional because the library loads the WebAssembly code from a CDN by default. But I like to have all the files in one place for a project, and I don't have to worry about the CDN being down.

Transformers.js can load models directly from Hugging Face, but as with the WebAssembly files, I like to have all the files in the project. So, I copied the files for the model from Hugging Face into the src/assets folder:

    assets
    └── onnx-community
        └── Llama-3.2-1B-Instruct-q4f16
            ├── onnx
            │   └── model_q4f16.onnx
            ├── config.json
            ├── generation_config.json
            ├── tokenizer.json
            └── tokenizer_config.json

The q4f16 is a quantized version of the model, and it's the smallest variant with a size of around 1 GB. Make sure that the directory structure looks exactly like the one above. The Transformers.js library expects the files in this structure.

Configuration

With the WebAssembly and model files in place, the application configures the sqlite-wasm library using the following code. It only needs to set the path where the WebAssembly code is located:

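    import sqlite3InitModule from '@sqlite.org/sqlite-wasm';

    const sqlite3 = await sqlite3InitModule({
      // Resolve the .wasm file from the folder configured in angular.json
      locateFile: (file) => `/sqlite-wasm/${file}`,
      print: console.log,
      printErr: console.error,
    });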

To configure Transformers.js, the application runs the following code. It needs to set the path to the WebAssembly files, the root path where the model files are located, and, because all files are inside the project, disable remote loading and only allow local models.

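    import { env } from '@huggingface/transformers';

    // Load models only from the application's own assets folder
    env.localModelPath = '/assets';
    env.allowLocalModels = true;
    env.allowRemoteModels = false;
    // Load the WebAssembly files from the project instead of the CDN
    env.backends.onnx.wasm!.wasmPaths = 'transformers-wasm/';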

This configuration prevents Transformers.js from loading data directly from Hugging Face.

Next, the application creates a new instance of TextGenerationPipeline with the following code.

    import { pipeline } from '@huggingface/transformers';

    // Resolved relative to env.localModelPath
    this.generator = await pipeline('text-generation', 'onnx-community/Llama-3.2-1B-Instruct-q4f16', {
      device: 'webgpu',
      dtype: 'q4f16',
      local_files_only: true,
    });

The TextGenerationPipeline is a class from the Transformers.js library that simplifies the usage of the LLM.

With this instance, the application can easily "chat" with the LLM later.

You can find more information about the pipeline API in the official documentation.

The code above also triggers the download of the model files into the browser. Even though this is the smallest variant of the Llama 3.2 model, it's still around 1 GB in size. That is a huge file in the web application world, and, depending on the bandwidth, it can take a while to download. It would be a pain if the user had to wait for the download every time they visit the application. Fortunately, Transformers.js caches all model files by default with the browser's Cache API. So, the download only happens once, and the application loads the model from the cache the next time. Be aware that the cache is limited in size, and the browser can delete it at any time. So, some users might have to download the model files on every visit. Take this into consideration when you plan to use large language models in a web application.
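Before starting a download of this size, it can be worth checking whether the browser has room for it. The following is a minimal sketch and not part of the demo application; `hasRoomFor` and `StorageEstimateLike` are names I made up, and the input is shaped like the object that `navigator.storage.estimate()` resolves to.

```typescript
// Shape of the relevant fields returned by navigator.storage.estimate().
interface StorageEstimateLike {
  quota?: number; // bytes the origin may use in total
  usage?: number; // bytes the origin already uses
}

// Hypothetical helper: true only when the estimate confirms enough free space.
function hasRoomFor(estimate: StorageEstimateLike, neededBytes: number): boolean {
  if (estimate.quota === undefined || estimate.usage === undefined) {
    return false; // the browser did not report numbers, so nothing is confirmed
  }
  return estimate.quota - estimate.usage >= neededBytes;
}

// Example with made-up numbers: 2 GB quota, 0.5 GB used, 1 GB model.
console.log(hasRoomFor({ quota: 2_000_000_000, usage: 500_000_000 }, 1_000_000_000));
```

In the browser, you would pass in the result of `await navigator.storage.estimate()`. Calling `await navigator.storage.persist()` additionally asks the browser not to evict the origin's data, but the browser is free to decline that request.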

Running

After a few tries, I ended up with the following prompt template:

    readonly #prompt_template = `
    You are given a database schema and a question.
    Based on the schema, you need to generate a valid SQL SELECT query for sqlite that answers the question.
    Schema:
    CREATE TABLE countries (
      id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL,
      name TEXT NOT NULL,
      area INTEGER,
      area_land INTEGER,
      area_water INTEGER,
      population INTEGER,
      population_growth REAL,
      birth_rate REAL,
      death_rate REAL,
      migration_rate REAL,
      flag_description TEXT
    )
    Based on the above schema, generate a SQL SELECT query for the following question:
    Question: {question}
    Generate the SQL query based on the schema and the question. The query should always start with "SELECT * FROM countries"
    Return only the query. Do not include any comments or extra whitespace.
    `;

After the user enters the text, the application creates the prompt for the LLM by taking the template and replacing the {question} placeholder with the user's input.

    const userPrompt = this.#prompt_template.replace('{question}', this.searchTerm);
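One detail to keep in mind with `String.prototype.replace`: when the replacement is a string, sequences like `$&` or `$$` in it are interpreted as special patterns, so a question that happens to contain them would be altered. A small sketch of a variant that inserts the text literally (the helper name `fillTemplate` is my own, not something from the demo):

```typescript
// Hypothetical helper: fills the {question} placeholder with the user's text.
// A replacer function is used because its return value is inserted as-is,
// whereas a replacement string would have "$&", "$$", etc. expanded.
function fillTemplate(template: string, question: string): string {
  return template.replace('{question}', () => question.trim());
}

// The "$&" stays in the prompt exactly as the user typed it.
console.log(fillTemplate('Question: {question}', ' countries where $& appears '));
```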

Next, the application prepares the messages for the LLM. In this example, there is just one user message. The application then calls this.generator with the messages and the maximum number of tokens to generate. The call returns the conversation as a list of messages; the last one is the generated assistant message, which contains the SQL statement.

    const messages = [
      {role: "user", content: userPrompt},
    ];
    const output: any = await this.generator(messages, {max_new_tokens: 200});
    // The last message in generated_text is the assistant's reply
    this.selectStatement = output[0].generated_text.at(-1).content;

After that, it runs the generated SQL statement on the SQLite database and stores the result in the countries array.

    this.db.exec({
      sql: this.selectStatement,
      rowMode: "object",
      callback: (row: any) => {
        this.countries.push(row);
      }
    });
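Because the statement comes straight from the model, it makes sense to check it before handing it to the database. The following is a minimal sketch of such a guard, not what the demo application does; `extractSelect` is a hypothetical helper, and it is deliberately conservative rather than a real SQL parser.

```typescript
// Hypothetical guard: returns a single SELECT statement, or null if the
// model's answer does not look like one.
function extractSelect(raw: string): string | null {
  // Models often wrap answers in Markdown code fences; drop them.
  const withoutFences = raw.replace(/`{3}(?:sql)?/gi, '').trim();
  // Allow one trailing semicolon, but nothing after it.
  const statement = withoutFences.replace(/;\s*$/, '');
  // Any remaining semicolon could separate several statements. This also
  // rejects semicolons inside string literals, which is overly strict but safe.
  if (statement.includes(';')) {
    return null;
  }
  // Only accept statements that start with SELECT.
  if (!/^select\s/i.test(statement)) {
    return null;
  }
  return statement;
}

console.log(extractSelect('SELECT * FROM countries WHERE population > 1000000;'));
console.log(extractSelect('Sure! Here is your query.'));
```

The application could call this helper on the assistant message and only run `this.db.exec` when the result is not null. A check like this does not make the statement correct; it only filters out answers that are obviously not a single SELECT.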

Note that this demo application runs everything in the main thread, blocking the UI while the LLM works. Depending on the model size and the device's capabilities, this can take a few seconds to minutes. For a real-world application, it would be better to run the LLM in a Web Worker, in a separate thread, so the UI stays responsive. The transformers.js-examples repository has a few examples that show how to run code in a Web Worker.
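To give an idea of what the Web Worker approach involves, here is a rough sketch of a request/response protocol between the page and a worker. All names (`GenerateRequest`, `GenerateResponse`, `createWorkerHandler`, `PendingRequests`) are my own inventions for illustration, and the generator is passed in as a plain async function, so the sketch makes no assumptions about how the pipeline is created inside the worker.

```typescript
// Hypothetical message shapes exchanged between the page and the worker.
interface GenerateRequest { id: number; prompt: string; }
type GenerateResponse =
  | { id: number; ok: true; text: string }
  | { id: number; ok: false; error: string };

// Worker side: wraps an async text generator in a message handler.
function createWorkerHandler(
  generate: (prompt: string) => Promise<string>,
  reply: (response: GenerateResponse) => void,
): (request: GenerateRequest) => Promise<void> {
  return async (request) => {
    try {
      reply({ id: request.id, ok: true, text: await generate(request.prompt) });
    } catch (e) {
      const error = e instanceof Error ? e.message : String(e);
      reply({ id: request.id, ok: false, error });
    }
  };
}

// Page side: remembers in-flight requests so replies can be matched by id.
class PendingRequests {
  private nextId = 1;
  private readonly waiting = new Map<
    number,
    { resolve: (text: string) => void; reject: (error: Error) => void }
  >();

  create(): { id: number; result: Promise<string> } {
    const id = this.nextId++;
    const result = new Promise<string>((resolve, reject) => {
      this.waiting.set(id, { resolve, reject });
    });
    return { id, result };
  }

  // Returns false when the reply does not belong to a known request.
  settle(response: GenerateResponse): boolean {
    const entry = this.waiting.get(response.id);
    if (!entry) return false;
    this.waiting.delete(response.id);
    if (response.ok) entry.resolve(response.text);
    else entry.reject(new Error(response.error));
    return true;
  }
}
```

Inside the worker, the handler would be connected with something like `self.onmessage = (e) => handler(e.data)` and `reply` would call `self.postMessage`. On the page, `worker.onmessage = (e) => pending.settle(e.data)` resolves the matching promise while the UI thread stays free.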

Conclusion

Transformers.js is a powerful library that allows you to run large language models in the browser. But we can't just run any huge model in the browser because of the constrained environment. Another challenge is that you can't control the user's browser and hardware. So, you need a way to fall back to alternative methods if the model does not run in the browser, like sending requests to a server and running the model there.
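A fallback decision like this could start with a simple capability check. The sketch below is an assumption about how one might structure it, not code from the demo: `chooseBackend`, `hasWebGpuApi`, and the `'server'` option are hypothetical, and the navigator is passed in as a parameter so the logic does not depend on browser globals.

```typescript
// Minimal shapes of the browser objects this sketch relies on.
interface GpuLike {
  requestAdapter(): Promise<unknown>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

type Backend = 'webgpu' | 'server';

// True when the browser exposes the WebGPU entry point at all.
function hasWebGpuApi(nav: NavigatorLike): boolean {
  return typeof nav.gpu?.requestAdapter === 'function';
}

// Picks the in-browser model only when a GPU adapter is actually available.
async function chooseBackend(nav: NavigatorLike): Promise<Backend> {
  if (!hasWebGpuApi(nav)) {
    return 'server';
  }
  try {
    // requestAdapter() resolves to null when no suitable adapter exists.
    const adapter = await nav.gpu!.requestAdapter();
    return adapter ? 'webgpu' : 'server';
  } catch {
    return 'server';
  }
}
```

In the browser, you would call `await chooseBackend(navigator)` and either create the pipeline with `device: 'webgpu'` or send the question to your own server endpoint instead. A successful adapter request still does not guarantee that a 1 GB model fits into memory, so catching errors from the pipeline creation remains necessary.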

Also, the quality and capabilities of tiny models like the Llama 3.2 1B are nowhere near the quality of a larger model. Additionally, the model in this demo is quantized, which lowers the quality even more. When you play with this demo application, you will see that the generated SQL statements are sometimes valid but not even close to the optimal SQL; sometimes, the SQL statements are invalid, and sometimes, the answer is not even SQL.

Nevertheless, it's impressive that you can run a model with one billion parameters in the browser. In the next blog post, we are going to explore how to improve the quality of the answers by fine-tuning the model.

To learn more about Transformers.js, check out the documentation. The library's source code is available on GitHub. You can find many examples in the transformers.js-examples repository. It is worth noting that Transformers.js not only supports text generation but can also be used for many other tasks like text and image classification, speech recognition, embeddings, and more.