When you create a website or web application and need to store information on the client for performance reasons, or because the site should work offline, you can choose between two built-in options: Web Storage and IndexedDB.

Web Storage consists of the sessionStorage and localStorage objects. The browser destroys data stored in sessionStorage when the user closes the tab or browser, while localStorage survives a browser restart. Web Storage only supports strings as keys and values. You can still save arrays and objects into Web Storage by serializing them first (for example, with JSON.stringify).
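As a minimal sketch of that serialization step, the two helpers below wrap JSON.stringify and JSON.parse around any object that offers the Web Storage methods getItem and setItem. The names saveJSON and loadJSON are hypothetical and not part of the Web Storage API.

```javascript
// Hypothetical helpers. `storage` is localStorage, sessionStorage,
// or any object with the same getItem/setItem methods.
function saveJSON(storage, key, value) {
  storage.setItem(key, JSON.stringify(value));
}

function loadJSON(storage, key) {
  const raw = storage.getItem(key);
  // getItem returns null when the key does not exist
  return raw === null ? null : JSON.parse(raw);
}

// Browser usage:
// saveJSON(localStorage, 'settings', { theme: 'dark', tabs: [1, 2] });
// const settings = loadJSON(localStorage, 'settings');
```

Keep in mind that JSON cannot represent every JavaScript value. A Date, for example, comes back as a string after the round trip.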

Web Storage is a straightforward API to use, but when you have to store a lot of data, or the application needs to query the data in different ways, IndexedDB might be the more suitable database. In this post, we're going to take a closer look at IndexedDB.

Browser support ¶

The W3C issued the final recommendation for IndexedDB in January 2015, and browser support has significantly improved since then. While Safari 8 had a buggy implementation that made IndexedDB unreliable, modern browsers provide excellent support. The main exception is Internet Explorer, which has an incomplete implementation lacking features like compound keys and multiEntry indexes.

Visit Can I use to see the current browser support for IndexedDB.

There is a polyfill for IndexedDB when your application needs to support older browsers: IndexedDBShim. It brings support for compound keys to Internet Explorer as well. When you create Cordova applications and have to support older platforms, this plugin could be useful: cordova-plugin-indexedDB.

When you are only interested in creating websites and progressive web applications for mobile devices, the browser support for IndexedDB looks great. Both Chrome and Safari have a complete implementation of IndexedDB version 1 and already support the upcoming version 2 of the standard. On the desktop, you have Chrome, Safari, Firefox, and Opera that support version 1 and version 2 of the specification.

See the current browser support for IndexedDB version 2 on Can I use....

If you are already familiar with version 1, the following article describes all the new features in version 2:

https://hacks.mozilla.org/2016/10/whats-new-in-indexeddb-2-0/

We're going to see a few of these features in action in the following examples.

What is IndexedDB ¶

IndexedDB is a powerful browser-based database capable of storing significant amounts of structured data. It supports indexes similar to SQL databases, enabling efficient data querying.

Key features of IndexedDB include:

- Flexibility: Can store nearly any type of data.

- Security: Protected by the same-origin policy (like Web Storage).

- Object-oriented: Stores JavaScript objects rather than fixed-column tables.

- Schema-based: Requires defining database structure before storing data.

The same-origin policy defines security boundaries based on the scheme (http/https), host, and port of a URL. For example, a website from http://www.bar.com cannot access a database created by http://www.foo.com.
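To illustrate the rule, this short sketch compares the origin of two URLs with the standard URL class. The function name sameOrigin is made up for this example.

```javascript
// Two URLs share an origin only when scheme, host, and port all match.
function sameOrigin(a, b) {
  return new URL(a).origin === new URL(b).origin;
}

console.log(sameOrigin('http://www.foo.com/app', 'http://www.foo.com/admin')); // true
console.log(sameOrigin('http://www.foo.com', 'http://www.bar.com'));           // false
console.log(sameOrigin('http://www.foo.com', 'https://www.foo.com'));          // false
console.log(sameOrigin('http://www.foo.com', 'http://www.foo.com:8080'));      // false
```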

Unlike SQL databases that use fixed-column tables, IndexedDB is object-oriented. It stores JavaScript objects mapped to primary keys, supporting native JavaScript types and complex objects.

Before storing data, applications must define the database schema by creating databases and object stores. Each object store:

- Must have a unique name within its database.

- Requires a primary key.

- Can optionally have one or more indexes.

The object store persists records that are key-value pairs. The primary key can be a string, date, number (float), binary blob, or array. A value can be anything the structured clone algorithm can copy, which covers most of what JavaScript supports: boolean, number, string, date, object, array, regexp, undefined, null, ArrayBuffer, typed array objects, and DataView. Functions cannot be stored.

Records within an object store are sorted according to the primary key in ascending order. Each object store can hold arbitrary objects, but most applications group similar objects in one store (for example, one store for users, one for tasks, one for notes, and so on). An object store is like a table in a SQL database or a collection in MongoDB.

Web applications are not limited to one database; they can create as many databases as they want, and each database can hold multiple object stores.

IndexedDB is a transactional database, and every operation (read, create, update, delete) needs to be executed in a transaction. When something goes wrong, IndexedDB automatically rolls back the changes and ensures the data integrity of the database.

Most methods of the IndexedDB API are asynchronous. Because the standard was defined before the age of Promises, it uses DOM events that the application needs to listen to.

Data in an IndexedDB database is persistent and does survive a browser restart, but there are eviction policies in place that allow the browser to delete data when it runs out of space. Here is a description of how modern browsers enforce these limits: https://developer.mozilla.org/en-US/docs/Web/API/Storage_API/Storage_quotas_and_eviction_criteria

An experienced user can delete the database by opening the browser developer tools and manually deleting it. For these reasons, applications need to be aware of and handle the situation where the database could be gone the next time they run.

In the future, you should be able to use the StorageManager Estimation API that tells the application if there is enough room to store the data. It's an experimental API and only available in the latest developer build of Chrome and Firefox (September 2017).
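Assuming the estimation API is exposed as navigator.storage.estimate() and resolves to an object with usage and quota values in bytes, a check could look like the following sketch. The helper name hasRoomFor is hypothetical, and the numbers the browser reports are only estimates.

```javascript
// `storageManager` is expected to be navigator.storage in a browser.
// hasRoomFor is a hypothetical helper name.
async function hasRoomFor(storageManager, bytesNeeded) {
  if (!storageManager || typeof storageManager.estimate !== 'function') {
    return undefined; // API not available, so there is no answer
  }
  const { usage, quota } = await storageManager.estimate();
  return quota - usage >= bytesNeeded;
}

// Browser usage:
// hasRoomFor(navigator.storage, 5 * 1024 * 1024).then(ok => console.log(ok));
```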


Basic programming model ¶

Applications using IndexedDB typically follow this workflow:

- Database Initialization
  - Create and open a database.
  - Define the schema by creating object stores and indexes.
- Data Operations
  - Start transactions.
  - Execute database operations (CRUD).
  - Register event listeners to handle operation completion.
- Result Processing
  - Handle successful operations.
  - Process returned data.
  - Handle errors and edge cases.

You open a database with the open() method from the global indexedDB object.

const openRequest = indexedDB.open('TestDB', 1);

Each database has a name that identifies it within the origin. The name can be any string. The second parameter specifies the version of the database the application expects. When you omit the second parameter, the method opens an existing database with its current version and creates a new database with version 1. To delete a database, you can call the deleteDatabase() method:

indexedDB.deleteDatabase('TestDB');

open() is an asynchronous operation and emits three events: success, error, and upgradeneeded.

If the database does not exist, open() creates it and triggers the upgradeneeded event. If the database already exists, but the stored version number is less than the second parameter of the open() method, the upgradeneeded event is emitted.

IndexedDB runs the upgradeneeded handler inside a transaction. IndexedDB automatically starts and commits this transaction. When the handler throws an error, IndexedDB aborts the upgrade transaction and rolls back the changes.

When the upgradeneeded handler completes successfully, the success event is triggered.

When the database exists, and the version number of the open call matches the current version of the stored database, only the success event is emitted.

The version number must be a positive integer (the specification declares it as an unsigned long long). Version numbers don't need to be consecutive, but they must increase. Most likely, you use a sequence like 1, 2, 3, 4, but 10, 20, 30, 40 works as well.

When an application tries to open a database with a requested version number less than the current database version, the open request fails, and the error event fires with a VersionError.
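The version rules can be condensed into a small decision function. This is a plain model for illustration, not browser code. It assumes that a database that does not exist yet counts as version 0 and that the upgradeneeded handler finishes without an error. The name eventsForOpen is hypothetical.

```javascript
// A plain model of the version rules, not browser code.
// storedVersion is 0 when the database does not exist yet.
function eventsForOpen(storedVersion, requestedVersion) {
  if (requestedVersion < storedVersion) {
    return ['error'];
  }
  if (requestedVersion > storedVersion) {
    return ['upgradeneeded', 'success'];
  }
  return ['success'];
}

console.log(eventsForOpen(0, 1)); // [ 'upgradeneeded', 'success' ]
console.log(eventsForOpen(1, 1)); // [ 'success' ]
console.log(eventsForOpen(1, 2)); // [ 'upgradeneeded', 'success' ]
console.log(eventsForOpen(2, 1)); // [ 'error' ]
```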

Because you can only change the database schema in the upgradeneeded event handler, you need to trigger this event by increasing the version number every time you have to make changes to the structure of the database, like creating, deleting, and renaming object stores and indexes.

Each asynchronous method of IndexedDB returns a request object. This object receives the success and error events and has onsuccess and onerror properties where your application can attach a handler. Alternatively, an application can use addEventListener and removeEventListener to manage handlers. The request object also has readyState, result, and error properties that give the application access to the current state of the request. The result property holds the result of the operation. For example, for the open operation, it references the database object, which is the main entry point for the following operations.

openRequest.onerror = event => {

// handle error

console.log('error', event);

};

openRequest.onupgradeneeded = event => {

console.log('upgradeneeded');

const db = event.target.result;

};

let db;

openRequest.onsuccess = event => {

console.log('success');

db = event.target.result;

};

Or with addEventListener:

openRequest.addEventListener('error', event => {

//handle error

console.log('error', event);

});

openRequest.addEventListener('upgradeneeded', event => {

console.log(event);

console.log('upgradeneeded');

});

let db;

openRequest.addEventListener('success', event => {

console.log('success');

db = event.target.result;

});

When you execute this code for the first time in a browser, you see two console log entries: "upgradeneeded" and "success". But when you refresh the browser and execute the program a second time, you just see "success". When you increase the version number parameter in the open call and refresh the browser, you see both strings again.
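Because every request reports its outcome through the success and error events, a small wrapper can turn a request into a Promise. The following is a minimal sketch. requestToPromise is a hypothetical helper name, and because it assigns onsuccess and onerror, it replaces handlers that were set on the same request earlier.

```javascript
// requestToPromise is a hypothetical helper name.
// It resolves with request.result and rejects with request.error.
function requestToPromise(request) {
  return new Promise((resolve, reject) => {
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}

// Browser usage (an open request still needs an upgradeneeded handler
// whenever the schema has to be created or changed):
// const openRequest = indexedDB.open('TestDB', 1);
// requestToPromise(openRequest).then(db => console.log(db.name));
```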

Error events bubble, like DOM events. First, they try to call the error handler on the request object, then on the transaction, and finally on the database. Therefore, you could install a global error handler on the database object.

db.onerror = event => {

console.log(event);

};

Success events do not bubble up.

To store data, an application needs to create an object store first. As mentioned above, applications can only do that in the upgradeneeded event listener. In this example, the application creates a store with the name users, which has a primary key that references the field userName in the user object.

openRequest.onupgradeneeded = event => {

console.log('upgradeneeded');

const db = event.target.result;

db.createObjectStore('users', { keyPath: "userName" });

};

After the onupgradeneeded handler finishes successfully, the success event handler is called. Before the application can insert and read data, it needs to start a transaction. The following example inserts two user objects with the objectStore.add() and objectStore.put() methods. add() is an insert-only operation and throws an error when the primary key already exists. put() is either an insert when the primary key does not exist or an update when the record with that primary key exists.

objectStore.get() can fetch a record with the primary key. objectStore.getAll() returns all records of the store. The objectStore.delete() method deletes records with the primary key, and objectStore.count() returns the number of records in that store.

openRequest.onsuccess = event => {

console.log('success');

const db = event.target.result;

db.onerror = event => {

console.log(event);

};

const transaction = db.transaction('users', 'readwrite');

const store = transaction.objectStore('users');

store.add({ userName: 'jd', name: 'John', role: 'admin' }); // insert

store.put({ userName: 'ja', name: 'Jason', role: 'operator' }); // insert

store.put({ userName: 'jd', name: 'Jason', role: 'operator' }); // update

store.get('jd').onsuccess = e => console.log('jd: ', e.target.result);

store.getAll().onsuccess = e => console.log(e.target.result);

store.delete('jd');

store.getAll().onsuccess = e => console.log(e.target.result);

store.count().onsuccess = e => console.log(`number of records: ${e.target.result}`);

};

All these operations need to run inside a transaction and return a request object where the application can listen for the success and error events. All operations run asynchronously but are executed in the order in which they were made, and the results are returned in the same order.

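IndexedDB automatically commits a transaction when all its requests have completed. Versions 1 and 2 of the standard offer no method to do that manually; newer browsers add transaction.commit(), but calling it is rarely necessary. An application may abort a transaction at any time with the transaction.abort() method. This method rolls back all changes made during the transaction.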

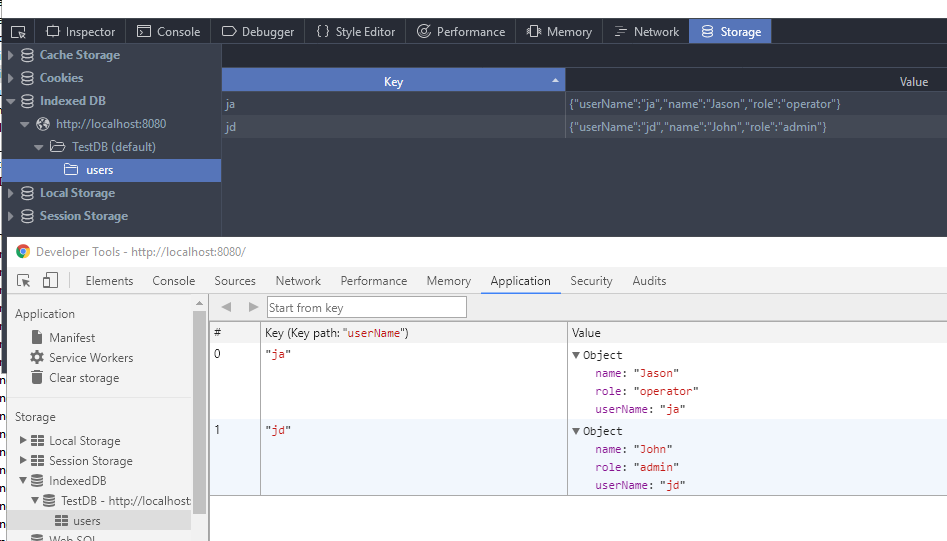

Browsers allow you to see and modify IndexedDB databases in the Developer Tools. In Chrome, you find the databases in the Application tab. Firefox lists the databases in the Storage tab.

Primary Keys ¶

Primary keys are essential for uniquely identifying records in an object store. IndexedDB supports two main types of keys:

- In-line Keys
  - Stored as part of the object.
  - Created using the keyPath option.
  - Can only store JavaScript objects as values.
  - Example: { id: 1, name: "John" } where id is the keyPath.
- Out-of-line Keys
  - Stored separately from the object.
  - Can store both JavaScript objects and primitive values.
  - More flexible for simple key-value storage.

Both key types can be either:

- Manually provided by the application.

- Auto-generated by the database.

This gives developers four primary key configuration options to choose from based on their specific needs.

Let's see some examples for each of these types.

Out-of-line ¶

To create an out-of-line primary key, you omit the second parameter of the db.createObjectStore() method. The following example maps a number to a string (1->"one", 2->"two", ...). To insert a record, you can choose between objectStore.add() and objectStore.put(). Because the primary key is not auto-generated, the code has to provide it as the second parameter of the method call. add() can only insert records and throws an error when the primary key already exists. put() is either an insert or update operation.

const openRequest = indexedDB.open("keys", 1);

openRequest.onupgradeneeded = e => {

const db = e.target.result;

db.createObjectStore('simple');

}

openRequest.onsuccess = e => {

const db = e.target.result;

const transaction = db.transaction('simple', 'readwrite');

const simpleStore = transaction.objectStore('simple');

simpleStore.add('one', 1); // insert

simpleStore.add('two', 2); // insert

simpleStore.put('three', 3); // insert

simpleStore.put('Three', 3); // update

simpleStore.get(3).onsuccess = event => console.log(event.target.result); //Three

}

Out-of-line auto generated ¶

To create an auto-increment primary key, you provide an object with the autoIncrement field set to true as the second parameter of the db.createObjectStore(). IndexedDB uses a key generator that produces consecutive integer values starting with 1. The key generator does not reset when the application deletes records. With an autogenerated key, there is no longer a need to provide a second parameter to the objectStore.add() and objectStore.put() methods; both behave the same and insert the record. The following code inserts three records, and the database generates the keys 1, 2, and 3. When you run this code a second time, the object store holds six records with the keys 1 to 6.

const openRequest = indexedDB.open("keys", 1);

openRequest.onupgradeneeded = e => {

const db = e.target.result;

db.createObjectStore('simple', { autoIncrement: true });

}

openRequest.onsuccess = e => {

const db = e.target.result;

const transaction = db.transaction('simple', 'readwrite');

const simpleStore = transaction.objectStore('simple');

simpleStore.add('one'); // insert

simpleStore.add('two'); // insert

simpleStore.put('three'); // insert

simpleStore.get(3).onsuccess = event => console.log(event.target.result); // three

}

You can still provide a value as the second parameter. In that case, IndexedDB does not auto-generate the primary key for this particular record. Note that add() fails when you try to insert a key that already exists, and put() updates the record. With a new database and object store, the following code assigns 1 to the first record, 10 to the second, and 11 to the third. When you try to run this code a second time, it fails because the primary key 10 already exists.

simpleStore.add('one');

simpleStore.add('two', 10);

simpleStore.put('three');
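The sequence 1, 10, 11 follows from how the key generator reacts to explicit keys. The class below is a simplified model of that behavior, written for illustration only; it is not the browser's implementation, and the name KeyGeneratorModel is made up.

```javascript
// A simplified model of the key generator, not the browser's implementation.
class KeyGeneratorModel {
  constructor() {
    this.next = 1; // generators start at 1
  }

  // Returns the key a record receives; explicitKey is optional.
  keyFor(explicitKey) {
    if (explicitKey === undefined) {
      return this.next++;
    }
    // An explicit numeric key at or beyond the counter moves the counter past it.
    if (typeof explicitKey === 'number' && explicitKey >= this.next) {
      this.next = Math.floor(explicitKey) + 1;
    }
    return explicitKey;
  }
}

const generator = new KeyGeneratorModel();
console.log(generator.keyFor());   // 1
console.log(generator.keyFor(10)); // 10
console.log(generator.keyFor());   // 11
```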

In-line ¶

To create an object store with an in-line primary key, you need to provide the keyPath configuration option as the second parameter to the db.createObjectStore() method. As usual, objectStore.add() is an insert-only operation and throws an error when the primary key already exists, and objectStore.put() is either an insert or an update operation.

const openRequest = indexedDB.open("keys", 1);

openRequest.onupgradeneeded = e => {

const db = e.target.result;

db.createObjectStore('objects', { keyPath: 'id' });

}

openRequest.onsuccess = e => {

const db = e.target.result;

const transaction = db.transaction('objects', 'readwrite');

const objectStore = transaction.objectStore('objects');

objectStore.add({id: 'one', data: 1});

objectStore.add({id: 'two', data: 2});

objectStore.put({id: 'three', data: 3});

objectStore.put({id: 'three', data: 33});

objectStore.get('three').onsuccess = event => console.log(event.target.result);

//{id: 'three', data: 33}

}

Because the primary key is not auto-generated, the object must contain the field id. The following put statement throws an exception:

objectStore.put({data: 44});

The keyPath can reference any field in the object. It also supports fields in embedded objects with the dot notation.

db.createObjectStore('objects', { keyPath: 'pk.id' });

objectStore.add({data: 1, pk: {id: 1, ts: 1928198298}});
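To make the dot notation concrete, here is a simplified model of how a key could be read from a value with a keyPath. The function extractKey is hypothetical and only covers plain property lookups; the array branch corresponds to key paths given as an array of fields.

```javascript
// Simplified model of key path evaluation; extractKey is a hypothetical name.
function extractKey(value, keyPath) {
  if (Array.isArray(keyPath)) {
    // An array key path produces an array key, one element per path
    return keyPath.map(path => extractKey(value, path));
  }
  return keyPath.split('.').reduce(
    (current, property) => (current == null ? undefined : current[property]),
    value
  );
}

const record = { data: 1, pk: { id: 1, ts: 1928198298 } };
console.log(extractKey(record, 'pk.id'));           // 1
console.log(extractKey(record, ['pk.id', 'data'])); // [ 1, 1 ]
console.log(extractKey({ data: 44 }, 'id'));        // undefined
```

The last call shows why an object without the key property cannot be stored in an object store with an in-line key that is not auto-generated: there is no key to extract.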

In-line auto generated ¶

To create this kind of primary key, you specify both keyPath and autoIncrement in the configuration object of the db.createObjectStore() method. IndexedDB then installs a key generator for this object store that creates consecutive integer values starting with 1. The property that holds the key should not be present in the object that you want to store. The database automatically adds the property to the object when the insert is successful.

const openRequest = indexedDB.open("keys", 1);

openRequest.onupgradeneeded = e => {

const db = e.target.result;

db.createObjectStore('objects', { keyPath: 'userId', autoIncrement: true });

}

openRequest.onsuccess = e => {

const db = e.target.result;

const transaction = db.transaction('objects', 'readwrite');

const objectStore = transaction.objectStore('objects');

objectStore.add({data: 1}); // insert

objectStore.add({data: 2}); // insert

objectStore.put({data: 3}); // insert

objectStore.get(3).onsuccess = event => console.log(event.target.result);

//{data: 3, userId: 3}

}

When you use in-line keys, you cannot provide the second parameter to the add() and put() methods. This code throws an exception:

objectStore.add({data: 2}, 20);

But you can save objects that already contain the property referenced in the keyPath. The following code inserts the records with the primary keys 1, 20, 21, and 40. add() fails when the key already exists, and put() updates the record.

objectStore.add({data: 1});

objectStore.add({userId: 20, data: 2});

objectStore.put({data: 3});

objectStore.put({userId: 40, data: 40});

IndexedDB supports composite or array keys; a key comprises two or more fields. This could be useful when you have an object, but none of the properties alone is unique. This is not a primary key the database can auto-generate; the application has to provide the values.

To create such a key, you need to specify the fields in an array.

db.createObjectStore('objects', { keyPath: ['id', 'data'] });

and then store the object as usual

objectStore.add({id: 'one', data: 1});

To access the record with the primary key, you need to provide an array as well. Make sure that the values are in the same order as specified in the keyPath definition.

objectStore.get(['one', 1]).onsuccess = event => console.log(event.target.result);

Migration ¶

Over the lifespan of an application, requirements change and affect the structure of the database. With IndexedDB, an application can only change the structure of a database in the upgradeneeded event handler. This handler is triggered every time the version number passed to the indexedDB.open() method is greater than the current version number of the database. We have already seen this behavior in action in the first example. In this section, we look at an example with multiple versions and how we can organize the migration steps.

The initial version of our example application stores contact objects that look like this:

{

name: 'John, Doe',

city: 'London',

email: 'john@email.com',

gender: 'M'

}

Version 1 ¶

For this application, we create a database with the name MyApp, an initial version 1, and an object store contacts with an auto-increment primary key mapped to the id field of the contact object. And we create indexes for the name and email fields. We know that, within the scope of our application, an email can only be referenced in one contact and is therefore unique.

const openRequest = indexedDB.open("MyApp", 1);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

const store = db.createObjectStore('contacts', { keyPath: 'id', autoIncrement: true });

store.createIndex('nameIx', 'name');

store.createIndex('emailIx', 'email', { unique: true});

};

Version 2 ¶

Over time, our application grows, and we add a new function to store todo lists. For that, we need a new object-store. To trigger the upgrade, we increase the version number to 2. We cannot simply add one db.createObjectStore() statement to the existing source code because there are users with a version 1 database that contains the contacts object store, and IndexedDB throws an error when the application calls createObjectStore with a name that already exists. And we cannot simply delete the code from version 1 and only add the db.createObjectStore() statement for the new store because there are users that open our web application for the first time and don't have any database yet.

One way to solve that is to differentiate first-time users and recurring users. Fortunately, the upgradeneeded event object contains a property oldVersion. For new users, this property has the value 0, and for recurring users, it is 1. The code checks if the version is 0 and creates the contacts object-store. When the database is at version 1, it only has to create the new store.

const openRequest = indexedDB.open("MyApp", 2);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

if (event.oldVersion === 0) {

let store = db.createObjectStore('contacts', { keyPath: 'id', autoIncrement: true });

store.createIndex('nameIx', 'name');

store.createIndex('emailIx', 'email', { unique: true});

}

let store = db.createObjectStore('todos', { keyPath: 'id' });

store.createIndex('priorityIx', 'priority');

};

Another way to solve the problem is by checking if the object store already exists. The database has a read-only property objectStoreNames that contains the names of all object stores currently stored in the database.

const openRequest = indexedDB.open("MyApp", 2);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

if (!db.objectStoreNames.contains('contacts')) {

let store = db.createObjectStore('contacts', { keyPath: 'id', autoIncrement: true });

store.createIndex('nameIx', 'name');

store.createIndex('emailIx', 'email', { unique: true});

}

let store = db.createObjectStore('todos', { keyPath: 'id' });

store.createIndex('priorityIx', 'priority');

};

Version 3 ¶

We continue working on the application and do some refactoring. We want to change the names of the object stores to singular because we no longer like the plural names, and we want to change the postfix of the indexes from Ix to Index.

We now have to support 3 different cases: new users, users with version 1, and users with a version 2 database. Here, we do that by checking if the old object store exists. If not, we create stores with new names. If the old store exists, we rename it. To rename a store, we need to get a reference to it. This can be done by calling the objectStore() method on the transaction object (event.target.transaction). Then we can rename the store by assigning a new value to the name property. To rename the index, we call the index() method from the object store object and assign a new name to the name property. Note that renaming is a feature from the IndexedDB version 2 standard.

const openRequest = indexedDB.open("MyApp", 3);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

if (!db.objectStoreNames.contains('contacts')) {

const store = db.createObjectStore('contact', { keyPath: 'id', autoIncrement: true });

store.createIndex('nameIndex', 'name');

store.createIndex('emailIndex', 'email', { unique: true});

} else {

const txn = event.target.transaction;

const store = txn.objectStore('contacts');

store.name = 'contact';

store.index('nameIx').name = 'nameIndex';

store.index('emailIx').name = 'emailIndex';

}

if (!db.objectStoreNames.contains('todos')) {

const store = db.createObjectStore('todo', { keyPath: 'id' });

store.createIndex('priorityIndex', 'priority');

} else {

const txn = event.target.transaction;

const store = txn.objectStore('todos');

store.name = 'todo';

store.index('priorityIx').name = 'priorityIndex';

}

};

Version 4 ¶

In the next update, we not only want to change the structure of the database but also need to migrate some data. In the contact object store, we initially store the name in one field (John, Doe), first name and last name separated with a comma. We realize that this is a mistake because it makes finding contacts very difficult. For that reason, we want to split this field into two fields, create an index for each field, and delete the nameIndex index.

Now we have to manage 4 different cases. We adjust the code from the 3rd upgrade because here, we only have to create the object stores when the store with the old name (contacts) and new name (contact) do not already exist. Then, we delete the index with contactStore.deleteIndex('nameIndex'), iterate over all the contact records with a cursor, split the name field into a firstName and lastName field, delete the name field, update the changed object in the database with cursor.update(), and finally create an index for the two new fields.

const openRequest = indexedDB.open("MyApp", 4);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

if (!db.objectStoreNames.contains('contacts') && !db.objectStoreNames.contains('contact')) {

const store = db.createObjectStore('contact', { keyPath: 'id', autoIncrement: true });

store.createIndex('nameIndex', 'name');

store.createIndex('emailIndex', 'email', { unique: true });

} else if (db.objectStoreNames.contains('contacts')) {

const txn = event.target.transaction;

const store = txn.objectStore('contacts');

store.name = 'contact';

store.index('nameIx').name = 'nameIndex';

store.index('emailIx').name = 'emailIndex';

}

if (!db.objectStoreNames.contains('todos') && !db.objectStoreNames.contains('todo')) {

const store = db.createObjectStore('todo', { keyPath: 'id' });

store.createIndex('priorityIndex', 'priority');

} else if (db.objectStoreNames.contains('todos')) {

const txn = event.target.transaction;

const store = txn.objectStore('todos');

store.name = 'todo';

store.index('priorityIx').name = 'priorityIndex';

}

const txn = event.target.transaction;

const contactStore = txn.objectStore('contact');

contactStore.deleteIndex('nameIndex');

const allrequest = contactStore.openCursor();

allrequest.onsuccess = event => {

const cursor = event.target.result;

if (cursor) {

const contact = cursor.value;

// default to empty strings so a name without a comma does not abort the upgrade

const [firstName = '', lastName = ''] = contact.name.split(',');

contact.firstName = firstName.trim();

contact.lastName = lastName.trim();

delete contact.name;

cursor.update(contact);

cursor.continue();

} else {

contactStore.createIndex('firstNameIndex', 'firstName');

contactStore.createIndex('lastNameIndex', 'lastName');

}

}; // end of allrequest.onsuccess

}; // end of openRequest.onupgradeneeded

More notes about migration:

- To delete a store, call the method db.deleteObjectStore('store_name').
- You can always add, rename, and delete indexes without losing any data.
- The only way to change the primary key of an object store (for example, not generated to auto-generated or a new keyPath) is by creating a new store, iterating over the old store, and copying each record to the new store.
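The examples in this section decide what to do by checking which stores exist. An alternative way to organize migration steps, sketched here under the assumption that every version bump is exactly one step, is a list of functions that are run from the stored version up to the requested version. The names migrations and runMigrations are hypothetical.

```javascript
// Hypothetical layout: migrations[n] upgrades a database from version n to n + 1.
// Each step receives the database and the upgrade transaction.
const migrations = [
  // 0 -> 1: initial schema
  (db, txn) => {
    const store = db.createObjectStore('contacts', { keyPath: 'id', autoIncrement: true });
    store.createIndex('nameIx', 'name');
    store.createIndex('emailIx', 'email', { unique: true });
  },
  // 1 -> 2: add the todo store
  (db, txn) => {
    const store = db.createObjectStore('todos', { keyPath: 'id' });
    store.createIndex('priorityIx', 'priority');
  }
];

// Runs every step between the stored version and the requested version.
function runMigrations(db, txn, oldVersion, newVersion) {
  for (let version = oldVersion; version < newVersion; version++) {
    migrations[version](db, txn);
  }
}

// Browser usage:
// const openRequest = indexedDB.open('MyApp', migrations.length);
// openRequest.onupgradeneeded = event => {
//   runMigrations(event.target.result, event.target.transaction,
//                 event.oldVersion, event.newVersion);
// };
```

A new user runs all steps, and a user with a version 1 database runs only the second one. Steps that iterate with a cursor, like the data migration in version 4, finish asynchronously, so a later step that depends on their result would have to be started from the cursor's final callback.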

Transactions ¶

We've seen transactions in action and mentioned that every read and modify operation has to run inside a transaction. To start a transaction, call database.transaction(). This method takes two parameters. The first parameter is mandatory and lists all the object stores you want to access inside the transaction. This is either one string or an array of strings. The optional second parameter specifies if the transaction should open in read-only or read-write mode. When this parameter is omitted, IndexedDB starts a read-only transaction.

const transaction = db.transaction('customers', 'readwrite');

// equivalent to

const transaction = db.transaction(['customers'], 'readwrite');

const transaction = db.transaction('customers', 'readonly');

// equivalent to

const transaction = db.transaction('customers');

// Multiple stores

const tx = db.transaction(['notes', 'users', 'customers'], 'readwrite');

Transactions emit three events: error, abort and complete.

When an error occurs, the error event is triggered. By default, the transaction then aborts, rolls back all the changes made during the transaction, and emits the abort event. You can override this behavior in the error handler by calling event.preventDefault(), which keeps the transaction alive. Calling event.stopPropagation() only stops the event from bubbling further.

Otherwise, when all pending requests have completed successfully, the transaction is automatically committed, and the complete event is fired.

The read-only property transaction.objectStoreNames contains a list of object store names that are accessible in this transaction.

The transaction.objectStore() method returns a reference to an object-store. This store has to be one of the stores you provided to the db.transaction() method. The transaction throws an error when the application tries to access a store that was not listed.

const tx = db.transaction(['notes', 'users', 'customers'], 'readwrite');

console.log(tx.objectStoreNames);

tx.oncomplete = e => console.log('all done');

tx.onerror = e => { /* Handle errors */ };

tx.onabort = e => console.log('transaction aborted');

const objectStore = tx.objectStore('users');

objectStore.delete(1);

objectStore.add({ /* ... */ });

// tx.abort();

An application may manually abort a transaction with the transaction.abort() method, which rolls back all the changes made during that transaction.
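Since a transaction ends with either the complete or the abort event, the two can be folded into a Promise. This is a minimal sketch; transactionDone is a hypothetical helper name, and it overwrites oncomplete and onabort handlers that were assigned before.

```javascript
// transactionDone is a hypothetical helper name.
function transactionDone(tx) {
  return new Promise((resolve, reject) => {
    tx.oncomplete = () => resolve();
    // abort also fires after an unhandled error, so it covers both cases;
    // tx.error is null when the application called tx.abort() itself.
    tx.onabort = () => reject(tx.error || new Error('transaction aborted'));
  });
}

// Browser usage:
// const tx = db.transaction('users', 'readwrite');
// tx.objectStore('users').delete(1);
// transactionDone(tx).then(() => console.log('all done'));
```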

Indexes ¶

Indexes are an important part of IndexedDB, and we have already seen a few examples of the objectStore.createIndex() method. But so far, we haven't discussed what indexes do and why an application needs them.

Without indexes, the only way to access the records is either with the primary key or by iterating over all the records. Indexes provide an additional performant access path to the records.

To create an index, an application calls the objectStore.createIndex() method. This method takes three parameters, though the last parameter is optional. The first parameter defines the name of the index. The second parameter is the keyPath that references a property in the object. Like the primary keys, this can be an array of two or more properties. The last parameter is an object with configuration parameters.

This code creates an index with the name nameIx and references the field name in the value object.

objectStore.createIndex('nameIx', 'name');

Unique indexes do not allow duplicate values, similar to the primary key. This adds a constraint to the database. The database throws an exception when the application tries to insert a record that violates the unique constraint.

objectStore.createIndex('emailIndex', 'email', { unique: true});

When a property is an array, and you want IndexedDB to add each of the array elements to the index, you need to set the multiEntry option to true.

objectStore.createIndex('hobbies', 'hobbies', { multiEntry: true});

You should not create an index for each property in your object. Only create indexes for properties you know are going to be queried in your application. IndexedDB needs to store indexes in internal data structures and has to update them every time an application inserts, updates, or deletes records. You can always add (and remove) indexes in future versions without losing any data.
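Adding an index later happens in the upgradeneeded handler of a new database version. Here is a small sketch of how that could look; the database name AddressBook, the version numbers, and the city index are hypothetical and only serve as an illustration.

```javascript
// Sketch of a versioned schema upgrade (all names are illustrative).
function upgradeSchema(db, upgradeTx, oldVersion) {
  if (oldVersion < 1) {
    // First installation: create the store and its initial index
    const store = db.createObjectStore('contacts', { keyPath: 'id' });
    store.createIndex('lastName', 'lastName');
  }
  if (oldVersion < 2) {
    // An existing store is reached through the upgrade transaction
    const store = upgradeTx.objectStore('contacts');
    // IndexedDB fills the new index from the records already in the store
    store.createIndex('city', 'city');
    // An index that is no longer needed could be dropped the same way:
    // store.deleteIndex('lastName');
  }
}

function openAddressBook() {
  // Requesting a higher version than the stored one triggers upgradeneeded
  const openRequest = indexedDB.open('AddressBook', 2);
  openRequest.onupgradeneeded = event => {
    upgradeSchema(event.target.result, event.target.transaction, event.oldVersion);
  };
  return openRequest;
}
```

Checking event.oldVersion step by step lets the same handler serve both fresh installations and users who still have version 1.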

Let's look at some queries. The following example creates an object store with the name contacts and inserts 10 records.

const openRequest = indexedDB.open("ContactDB", 1);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

const store = db.createObjectStore('contacts', { keyPath: 'id' });

store.createIndex('lastName', 'lastName');

store.createIndex('age', 'age');

store.createIndex('email', 'email', { unique: true });

store.createIndex('hobbies', 'hobbies', { multiEntry: true });

};

openRequest.onsuccess = event => {

const db = event.target.result;

db.onerror = event => {

console.log(event);

};

const transaction = db.transaction('contacts', 'readwrite');

const store = transaction.objectStore('contacts');

store.put({ id: 1, firstName: 'John', lastName: 'Doe', age: 31, email: 'jd@email.com',

hobbies: ['reading', 'drawing', 'painting'] });

store.put({ id: 2, firstName: 'Madge', lastName: 'Frazier', age: 22, email: 'mf@email.com',

hobbies: ['swimming', 'biking', 'jogging', 'yoga'] });

store.put({ id: 3, firstName: 'Green', lastName: 'Stanton', age: 44, email: 'gs@email.com',

hobbies: ['reading', 'writing', 'cooking'] });

store.put({ id: 4, firstName: 'Iva', lastName: 'Shelton', age: 33, email: 'is@email.com',

hobbies: ['yoga', 'cooking', 'painting', 'sewing'] });

store.put({ id: 5, firstName: 'Boyd', lastName: 'Marsh', age: 27, email: 'bm@email.com',

hobbies: ['scuba diving', 'skydiving', 'swimming', 'surfing'] });

store.put({ id: 6, firstName: 'Avis', lastName: 'Hicks', age: 39, email: 'ah@email.com',

hobbies: ['biking', 'reading', 'jogging'] });

store.put({ id: 7, firstName: 'Kim', lastName: 'Hicks', age: 25, email: 'kn@email.com',

hobbies: ['photography', 'canyoning', 'jogging', 'sailing'] });

store.put({ id: 8, firstName: 'Alexis', lastName: 'Hughes', age: 39, email: 'al@email.com',

hobbies: ['reading', 'gardening', 'walking'] });

store.put({ id: 9, firstName: 'Stone', lastName: 'Livingston', age: 33, email: 'sl@email.com',

hobbies: ['jogging', 'biking', 'yoga'] });

store.put({ id: 10, firstName: 'Pam', age: 28, email: 'pr@email.com',

hobbies: ['jogging', 'cooking', 'photography'] });

transaction.oncomplete = e => runQueries(db);

};

After the insert operation completes successfully, the code calls the runQueries method.

As always, read operations have to run inside a transaction; therefore, the application starts a read-only transaction that spans the contacts object-store. Then it gets a reference to the index with the objectStore.index() method.

The index object provides several methods to access the records. Note that all of these methods are asynchronous, and you always have to add a success handler to fetch the result. The property event.target.result holds the result of the query. Here is a basic code template with the index.get() method as an example.

function runQueries(db) {

const store = db.transaction('contacts', 'readonly').objectStore('contacts');

const lastNameIndex = store.index('lastName');

const ageIndex = store.index('age');

const emailIndex = store.index('email');

const hobbiesIndex = store.index('hobbies');

lastNameIndex.get('Hicks').onsuccess = e => console.log(e.target.result);

// {id: 6, firstName: "Avis", lastName: "Hicks", age: 39, email: "ah@email.com", …}

}

In the following descriptions, I omit the success handler.

lastNameIndex.getAll() returns all records that are stored in this index. All index methods return the records sorted according to the index property in ascending order. Notice that this particular index only contains 9 of the 10 test records. Record 10 does not have a property lastName and is therefore not included in this index.

/*

{"id":1,"firstName":"John","lastName":"Doe" ...}

{"id":2,"firstName":"Madge","lastName":"Frazier" ...}

{"id":6,"firstName":"Avis","lastName":"Hicks" ...}

{"id":7,"firstName":"Kim","lastName":"Hicks" ...}

{"id":8,"firstName":"Alexis","lastName":"Hughes" ...}

{"id":9,"firstName":"Stone","lastName":"Livingston" ...}

{"id":5,"firstName":"Boyd","lastName":"Marsh" ...}

{"id":4,"firstName":"Iva","lastName":"Shelton" ...}

{"id":3,"firstName":"Green","lastName":"Stanton" ...}

*/

You can provide an index key as the first parameter. In this example, getAll() only returns records where the value of lastName exactly matches the string 'Hicks'.

lastNameIndex.getAll('Hicks')

// {"id":6,"firstName":"Avis","lastName":"Hicks"...}

// {"id":7,"firstName":"Kim","lastName":"Hicks" ....}

The second optional parameter limits the number of records.

lastNameIndex.getAll(null, 2)

// {"id":1,"firstName":"John","lastName":"Doe" ...}

// {"id":2,"firstName":"Madge","lastName":"Frazier" ...}

lastNameIndex.getAll('Hicks', 1)

// {id: 6, firstName: "Avis", lastName: "Hicks" ...}

index.get() returns the first record that matches the provided index key. When the index is non-unique, it can hold multiple records with the same index key. In that case, get() returns the record with the lowest primary key. get(key) returns the same record as getAll(key, 1), except that getAll() always wraps its result in an array.

lastNameIndex.get('Hicks')

// {"id":6,"firstName":"Avis","lastName":"Hicks", .... }

index.get() is especially useful for querying unique indexes.

emailIndex.get('kn@email.com') // {id: 7, firstName: "Kim", email: "kn@email.com", ... }

emailIndex.get('kx@email.com') // undefined

The methods getKey() and getAllKeys() are alternatives to get() and getAll(). They do not return the whole record but only the primary key. If the application only needs the primary key of a record, prefer these methods because they don't have to deserialize the record, which saves time and memory. getAllKeys() always returns an array, and getKey() returns a single value.

lastNameIndex.getAllKeys() // [1, 2, 6, 7, 8, 9, 5, 4, 3]

lastNameIndex.getAllKeys(null, 2) // [1, 2]

lastNameIndex.getAllKeys('Hicks') // [6, 7]

lastNameIndex.getAllKeys('Hicks', 1) // [6]

lastNameIndex.getKey('Hicks') // 6

index.count() returns the number of records that match the query.

lastNameIndex.count() // 9

lastNameIndex.count('Hicks') // 2

All these methods are also available on the objectStore, including getKey() in browsers that implement version 2 of the specification.

Here you query with the primary key.

store.get(7) // {"id":7,"firstName":"Kim","lastName":"Hicks", ... }

store.count() // 10

Ranges ¶

The query methods from the previous section accept not only a single value that has to match the index key exactly, but also an object of type IDBKeyRange. To create a range, you call one of the static methods of the IDBKeyRange object. By default, the bounds are included, but you can exclude them with the optional boolean parameters.

| Range | Code |
|---|---|
| Index key <= x | IDBKeyRange.upperBound(x) |
| Index key < x | IDBKeyRange.upperBound(x, true) |
| Index key >= y | IDBKeyRange.lowerBound(y) |
| Index key > y | IDBKeyRange.lowerBound(y, true) |
| Index key >= x && <= y | IDBKeyRange.bound(x, y) |
| Index key > x && < y | IDBKeyRange.bound(x, y, true, true) |
| Index key > x && <= y | IDBKeyRange.bound(x, y, true, false) |
| Index key >= x && < y | IDBKeyRange.bound(x, y, false, true) |
| Index key === z | IDBKeyRange.only(z) |

The IDBKeyRange object provides the includes() method that tests if a value is inside the range.

const range = IDBKeyRange.bound(1, 10, true, true);

range.includes(5); // true

range.includes(10); // false

range.includes(1); // false

The examples we have seen so far internally use the IDBKeyRange.only range.

These two statements return the same result.

lastNameIndex.count('Hicks') // 2

lastNameIndex.count(IDBKeyRange.only('Hicks')) // 2

Here are a few examples with range queries:

ageIndex.getAll(IDBKeyRange.upperBound(25))

// {"id":2,"firstName":"Madge","lastName":"Frazier","age":22 ...}

// {"id":7,"firstName":"Kim","lastName":"Hicks","age":25 ...}

ageIndex.getAll(IDBKeyRange.upperBound(25, true))

// {"id":2,"firstName":"Madge","lastName":"Frazier","age":22 ...}

ageIndex.getAll(IDBKeyRange.lowerBound(39))

/*

{"id":6,"firstName":"Avis","lastName":"Hicks","age":39 ...}

{"id":8,"firstName":"Alexis","lastName":"Hughes","age":39 ...}

{"id":3,"firstName":"Green","lastName":"Stanton","age":44 ...}

*/

ageIndex.getAll(IDBKeyRange.lowerBound(39, true))

// {"id":3,"firstName":"Green","lastName":"Stanton","age":44 ...}

ageIndex.getAll(IDBKeyRange.bound(25, 39))

/*

{"id":7,"firstName":"Kim","lastName":"Hicks","age":25 ...}

{"id":5,"firstName":"Boyd","lastName":"Marsh","age":27 ...}

{"id":10,"firstName":"Pam","age":28 ...}

{"id":1,"firstName":"John","lastName":"Doe","age":31 ...}

{"id":4,"firstName":"Iva","lastName":"Shelton","age":33 ...}

{"id":9,"firstName":"Stone","lastName":"Livingston","age":33 ...}

{"id":6,"firstName":"Avis","lastName":"Hicks","age":39 ...}

{"id":8,"firstName":"Alexis","lastName":"Hughes","age":39 ...}

*/

ageIndex.getAllKeys(IDBKeyRange.bound(25, 39, true, true))

// [5, 10, 1, 4, 9]

Ranges are not limited to numbers.

emailIndex.getAll(IDBKeyRange.bound('a', 'c'))

/*

{"id":6,"firstName":"Avis","lastName":"Hicks","age":39,"email":"ah@email.com", ...}

{"id":8,"firstName":"Alexis","lastName":"Hughes","age":39,"email":"al@email.com", ...}

{"id":5,"firstName":"Boyd","lastName":"Marsh","age":27,"email":"bm@email.com", ...}

*/

hobbiesIndex.getAll(IDBKeyRange.bound('c', 'd', false, true)) // All records with a hobby starting with 'c'

/*

{"id":7,"firstName":"Kim","lastName":"Hicks",...,

"hobbies":["photography","canyoning","jogging","sailing"]}

{"id":3,"firstName":"Green","lastName":"Stanton",...,"hobbies":["reading","writing","cooking"]}

{"id":4,"firstName":"Iva","lastName":"Shelton",...,"hobbies":["yoga","cooking","painting","sewing"]}

{"id":10,"firstName":"Pam",...,"hobbies":["jogging","cooking","photography"]}

*/
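The bound('c', 'd', false, true) trick generalizes to prefixes of any length: keep the prefix as the inclusive lower bound and increment its last character for the exclusive upper bound. The sketch below shows one way to do this; prefixBounds and prefixRange are hypothetical helper names, and the approach assumes a non-empty prefix that does not end with the highest possible code unit.

```javascript
// Computes the bounds for a "starts with" query (illustrative helper).
function prefixBounds(prefix) {
  if (prefix.length === 0) {
    throw new RangeError('prefix must not be empty');
  }
  const lastCode = prefix.charCodeAt(prefix.length - 1);
  if (lastCode === 0xffff) {
    throw new RangeError('last character cannot be incremented');
  }
  // Replace the last UTF-16 code unit with its successor: 'co' becomes 'cp'
  const upper = prefix.slice(0, -1) + String.fromCharCode(lastCode + 1);
  return { lower: prefix, upper };
}

// Browser only: wraps the bounds in a key range with an exclusive upper bound.
function prefixRange(prefix) {
  const { lower, upper } = prefixBounds(prefix);
  return IDBKeyRange.bound(lower, upper, false, true);
}
```

With this helper, hobbiesIndex.getAll(prefixRange('co')) would return the records that have a hobby starting with 'co', such as 'cooking'.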

Cursors ¶

The query methods we have seen so far return the records in one batch. This could be a problem when you have to process thousands of records. getAll() has to deserialize all the objects at once and store them in memory. For processing many records, it's more memory-efficient to use a cursor that processes the records one by one.

The methods index.openCursor() and index.openKeyCursor() create a cursor. These two methods also exist on the objectStore object. When the application only needs access to the primary key, you should always use the openKeyCursor() method, which does not have to deserialize the object from the database into memory.

Both methods take two optional parameters. The first parameter is either the index key or an IDBKeyRange object.

This works the same as with all the get methods we have seen so far.

The second parameter provides a direction. This is a string constant and supports these four values.

- 'next': The cursor shows all records, starts at the lower bound, and moves upwards in the order of the index key. This is the default when the parameter is omitted.
- 'nextunique': The cursor starts at the lower bound and moves upwards but only shows unique values. This is useful for non-unique indexes when you only want to see the unique values. When multiple records exist for the same index key, the cursor only processes the one with the lowest primary key and skips the others.
- 'prev': The cursor shows all records, starts at the upper bound, and moves downwards.
- 'prevunique': Like 'nextunique', the cursor filters out duplicates, but it starts at the upper bound and moves downwards.

Working with cursors follows this basic pattern. As soon as the application opens the cursor, the database calls the success handler with the first matched record. In the handler, you have to test if the cursor object is null. The cursor is null when the query does not return any results or when all records were processed.

If the cursor is not null, you process the record. Then you call the cursor.continue() method to move the cursor to the next record. This call triggers the success handler again.

ageIndex.openCursor(IDBKeyRange.lowerBound(30)).onsuccess = e => {

const cursor = e.target.result;

if (cursor) {

console.log(`primary key: ${cursor.primaryKey}, key: ${cursor.key},

value: ${JSON.stringify(cursor.value)}`);

cursor.continue();

} else {

console.log('all records processed');

}

};

The cursor.primaryKey property holds the primary key of the current record, cursor.key holds the index key (in this example, the value of the age property), and cursor.value points to the current object.

primary key: 1, key: 31, value: {"id":1,"firstName":"John","lastName":"Doe","age":31, ...}

primary key: 4, key: 33, value: {"id":4,"firstName":"Iva","lastName":"Shelton","age":33, ...}

primary key: 9, key: 33, value: {"id":9,"firstName":"Stone","lastName":"Livingston","age":33, ...}

primary key: 6, key: 39, value: {"id":6,"firstName":"Avis","lastName":"Hicks","age":39, ...}

primary key: 8, key: 39, value: {"id":8,"firstName":"Alexis","lastName":"Hughes","age":39, ...}

primary key: 3, key: 44, value: {"id":3,"firstName":"Green","lastName":"Stanton","age":44, ...}

With a key cursor, the property cursor.value is undefined.

ageIndex.openKeyCursor(IDBKeyRange.lowerBound(30)).onsuccess = e => {

const cursor = e.target.result;

if (cursor) {

console.log(`primary key: ${cursor.primaryKey}, key: ${cursor.key},

value: ${JSON.stringify(cursor.value)}`);

cursor.continue();

} else {

console.log('all records processed');

}

};

// primary key: 1, key: 31, value: undefined

// primary key: 4, key: 33, value: undefined

// primary key: 9, key: 33, value: undefined

// primary key: 6, key: 39, value: undefined

// primary key: 8, key: 39, value: undefined

// primary key: 3, key: 44, value: undefined

The direction parameter is useful when the application needs to process the records in reverse order or wants to filter out duplicate values when it loops over a non-unique index.

const hobbies = [];

hobbiesIndex.openKeyCursor(null, 'nextunique').onsuccess = e => {

const cursor = e.target.result;

if (cursor) {

hobbies.push(cursor.key);

cursor.continue();

} else {

console.log(hobbies);

}

};

/*

["biking", "canyoning", "cooking", "drawing", "gardening", "jogging", "painting", "photography",

"reading", "sailing", "scuba diving", "sewing", "skydiving", "surfing", "swimming", "walking",

"writing", "yoga"]

*/

hobbiesIndex.openKeyCursor(null, 'next')

/*

["biking", "biking", "biking", "canyoning", "cooking", "cooking", "cooking", "drawing",

"gardening", "jogging", "jogging", "jogging", "jogging", "jogging", "painting", "painting",

"photography", "photography", "reading", "reading", "reading", "reading", "sailing",

"scuba diving", "sewing", "skydiving", "surfing", "swimming", "swimming", "walking",

"writing", "yoga", "yoga", "yoga"]

*/

hobbiesIndex.openKeyCursor(null, 'prevunique')

/*

["yoga", "writing", "walking", "swimming", "surfing", "skydiving", "sewing", "scuba diving",

"sailing", "reading", "photography", "painting", "jogging", "gardening", "drawing", "cooking",

"canyoning", "biking"]

*/

hobbiesIndex.openKeyCursor(null, 'prev')

/*

["yoga", "yoga", "yoga", "writing", "walking", "swimming", "swimming", "surfing", "skydiving",

"sewing", "scuba diving", "sailing", "reading", "reading", "reading", "reading", "photography",

"photography", "painting", "painting", "jogging", "jogging", "jogging", "jogging", "jogging",

"gardening", "drawing", "cooking", "cooking", "cooking", "canyoning", "biking", "biking", "biking"]

*/

Cursors are not only useful for reading records. They also provide the method cursor.update() for updating and cursor.delete() for deleting the current record.
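To illustrate cursor.update() and cursor.delete(), here is a rough sketch of a helper that walks over a store or index and lets a callback decide what happens to each record. The name modifyRecords and its callback convention (return null to delete, an object to update, undefined to skip) are my own assumptions. The cursor has to come from openCursor() inside a readwrite transaction, and the callback should not change the property the cursor iterates over, because the record could then show up again later in the iteration.

```javascript
// Illustrative helper: source is an object store or index of a readwrite transaction.
function modifyRecords(source, query, modify) {
  return new Promise((resolve, reject) => {
    let touched = 0;
    const request = source.openCursor(query);
    request.onsuccess = e => {
      const cursor = e.target.result;
      if (!cursor) {
        // All records were visited; the transaction itself commits afterwards
        resolve(touched);
        return;
      }
      const changed = modify(cursor.value);
      if (changed === null) {
        cursor.delete(); // removes the record the cursor points to
        touched++;
      } else if (changed !== undefined) {
        cursor.update(changed); // overwrites the current record
        touched++;
      }
      cursor.continue();
    };
    request.onerror = () => reject(request.error);
  });
}
```

For example, with the contacts store from above, modifyRecords(store, null, c => c.lastName ? undefined : null) would delete every contact without a last name.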

Examples ¶

Let's look at two examples with bigger data sets and more complex queries.

Pokémon ¶

The first example fetches a JSON file that contains a collection of all Pokémon objects from the game Pokémon GO and inserts them into the database. Then it runs some queries.

You can find the dataset here:

https://github.com/Biuni/PokemonGO-Pokedex/blob/master/pokedex.json

Each object already has a unique property (id) that the application defines as the primary key of the object-store. The application queries the type and weaknesses properties and therefore creates indexes for these fields. Both fields are arrays, and to instruct IndexedDB to index each array element individually, the multiEntry option is set to true.

const openRequest = indexedDB.open("PokeDex", 1);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

const store = db.createObjectStore('pokemons', { keyPath: 'id' });

store.createIndex('type', 'type', { multiEntry: true });

store.createIndex('weaknesses', 'weaknesses', { multiEntry: true });

};

After the database and object store are created, the application starts a read-only transaction to count the number of records in the object-store. To save bandwidth, the application fetches the JSON file only once when the object store is empty.

let db;

openRequest.onsuccess = event => {

db = event.target.result;

db.onerror = event => {

console.log(event);

};

db.transaction('pokemons', 'readonly')

.objectStore('pokemons')

.count().onsuccess = e => {

const count = e.target.result;

if (count === 0) {

console.log('fetching and inserting data');

insertData();

} else {

console.log('data exists');

queryData();

}

};

};

The insertData method fetches the JSON file from GitHub and inserts the records into the database.

Here you see an example of how you can wrap the IndexedDB callbacks in a Promise.

The complete event is emitted as soon as all started asynchronous requests (store.put()) in the transaction are finished.

function insertData() {

fetch('https://raw.githubusercontent.com/Biuni/PokemonGO-Pokedex/master/pokedex.json')

.then(response => response.json())

.then(json => {

return new Promise((resolve, reject) => {

const tx = db.transaction('pokemons', 'readwrite');

const store = tx.objectStore('pokemons');

json.pokemon.forEach(p => store.put(p));

tx.oncomplete = e => resolve();

tx.onabort = e => reject(tx.error);

});

})

.then(queryData);

}
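The same idea works for individual requests. The following sketch wraps a single IndexedDB request in a Promise; requestToPromise and countPokemons are hypothetical names, not part of the API. One caveat applies: a transaction commits automatically as soon as no requests are pending, so unrelated asynchronous work such as fetch() should not be awaited between two requests of the same transaction.

```javascript
// Illustrative helper: resolves with the result of one IndexedDB request.
function requestToPromise(request) {
  return new Promise((resolve, reject) => {
    request.onsuccess = () => resolve(request.result);
    request.onerror = () => reject(request.error);
  });
}

// Usage sketch: count the records of the pokemons store.
async function countPokemons(db) {
  const store = db.transaction('pokemons', 'readonly').objectStore('pokemons');
  return requestToPromise(store.count());
}
```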

The queryData method shows you a few example queries. As usual, to query data, the application has to start a transaction.

store.count() returns the number of records in the store.

getAll() returns all the records that match the index key.

In the first example, these are Pokémon that are of type 'Grass', in the second query all Pokémon that are weak against 'Flying'.

In real-world applications, you often have to combine queries. IndexedDB supports compound indexes that span multiple properties. These are useful for AND combinations.

Unfortunately, the database does not support compound indexes together with the multiEntry option. But it's not that complicated to combine queries by executing multiple queries and then combining the results. The AND example searches for all Pokémon that are of type 'Bug' and are weak against 'Fire'.

The application starts both queries with index.getAllKeys(), wraps each of the callbacks in a Promise and waits with Promise.all for both results.

Then the code creates an intersection of the two result arrays, iterates over it, and fetches each Pokémon individually with store.get().

The last example looks for all Pokémon that are weak against 'Ice' OR 'Flying'. This looks very similar to the previous query, but instead of an intersection, it creates a union of the two result arrays. A Set helps to filter out duplicate primary keys.

function queryData() {

const store = db.transaction('pokemons', 'readonly').objectStore('pokemons');

const typeIndex = store.index('type');

const weaknessesIndex = store.index('weaknesses');

// Count all Pokémons

store.count().onsuccess = e => console.log(`Number of Pokemons: ${e.target.result}`);

// All grass type Pokémons

typeIndex.getAll('Grass').onsuccess = e => e.target.result.forEach(printPokemon);

// Pokémons weak against flying

weaknessesIndex.getAll('Flying').onsuccess = e => e.target.result.forEach(printPokemon);

// AND query

const bugQuery = new Promise(resolve => typeIndex.getAllKeys('Bug')

.onsuccess = e => resolve(e.target.result));

const fireQuery = new Promise(resolve => weaknessesIndex.getAllKeys('Fire')

.onsuccess = e => resolve(e.target.result));

Promise.all([bugQuery, fireQuery]).then(results => {

const intersection = results[0].filter(id => results[1].includes(id));

intersection.forEach(id => {

store.get(id).onsuccess = e => printPokemon(e.target.result);

});

});

// OR query

const iceQuery = new Promise(resolve => weaknessesIndex.getAllKeys('Ice')

.onsuccess = e => resolve(e.target.result));

const flyingQuery = new Promise(resolve => weaknessesIndex.getAllKeys('Flying')

.onsuccess = e => resolve(e.target.result));

Promise.all([iceQuery, flyingQuery]).then(results => {

const union = new Set([...results[0], ...results[1]]);

union.forEach(id => {

store.get(id).onsuccess = e => printPokemon(e.target.result);

});

});

}

function printPokemon(p) {

console.log(`ID: ${p.id} - Name: ${p.name} - Type: ${p.type.join(', ')}

- Weakness: ${p.weaknesses.join(', ')}`);

}
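The filter/includes intersection above scans the second array once per element of the first. For larger result sets, a Set lookup is a cheap alternative. Here is a small sketch with two hypothetical helpers, intersectKeys and unionKeys; it assumes primitive primary keys such as the numeric id used in this dataset, because a Set compares array keys by identity.

```javascript
// Keys present in both arrays (AND); keeps the order of the first array.
function intersectKeys(a, b) {
  const lookup = new Set(b);
  return a.filter(key => lookup.has(key));
}

// Keys present in at least one array (OR), without duplicates.
function unionKeys(a, b) {
  return [...new Set([...a, ...b])];
}

console.log(intersectKeys([1, 2, 3, 4], [3, 4, 5])); // [3, 4]
console.log(unionKeys([1, 2], [2, 3]));              // [1, 2, 3]
```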

Earthquakes ¶

This example imports a larger dataset: a list of earthquakes that happened in the past 30 days.

At the time of writing this post (September 2017), the file contains over 9500 earthquakes.

The application follows the same pattern as the previous example and imports the data only if the database is empty.

This saves some bandwidth because the JSON file is quite big. As usual, the application creates the object store and indexes in the upgradeneeded event handler.

All the properties that the application is interested in are inside the embedded properties object.

To reference them, the application uses the dot notation. The magTsunami index is a compound index that spans two properties.

const openRequest = indexedDB.open("Earthquake", 1);

openRequest.onupgradeneeded = event => {

const db = event.target.result;

const store = db.createObjectStore('earthquakes', { keyPath: 'id' });

store.createIndex('mag', 'properties.mag');

store.createIndex('time', 'properties.time');

store.createIndex('magTsunami', ['properties.tsunami', 'properties.mag']);

};

let db;

openRequest.onsuccess = event => {

db = event.target.result;

db.onerror = event => {

console.log(event);

};

db.transaction('earthquakes', 'readonly')

.objectStore('earthquakes')

.count().onsuccess = e => {

const count = e.target.result;

if (count === 0) {

console.log('fetching and inserting data');

insertData();

} else {

console.log('data exists');

queryData();

}

};

};

function insertData() {

fetch('https://earthquake.usgs.gov/earthquakes/feed/v1.0/summary/all_month.geojson')

.then(response => response.json())

.then(json => {

return new Promise((resolve, reject) => {

const tx = db.transaction('earthquakes', 'readwrite');

const store = tx.objectStore('earthquakes');

json.features.forEach(p => store.put(p));

tx.oncomplete = e => resolve();

tx.onabort = e => reject(tx.error);

});

})

.then(queryData);

}

The program executes five queries. First, it counts all the records in the object store and prints out the number.

Then it lists all earthquakes with a magnitude of 6 (inclusive) and higher.

magIndex.getAll() with the IDBKeyRange.lowerBound range returns all the earthquakes in ascending order of the magnitude.

The next query is similar and lists all earthquakes from the past 6 hours. The earthquake dataset stores the time in milliseconds since January 1, 1970 (the Unix epoch).

Next, I wanted to fetch the earthquake with the highest magnitude.

The code could very easily access the earthquake with the lowest magnitude with magIndex.getAll(null, 1). This works because the mag index is internally sorted by magnitude in ascending order. But getAll() does not give us the possibility to fetch records in descending order. For that reason, the application uses a cursor with the direction of prev. Because the application wants to stop the cursor after the first returned record, it does NOT call cursor.continue().

The last example accesses the compound index magTsunami and queries for all earthquakes that happened in or near an ocean and might cause a tsunami (tsunami === 1) and have a magnitude between 5 (inclusive) and 5.5 (exclusive). Bounds are inclusive by default, and you need to provide the optional boolean parameters to exclude them.
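To see why the bounds [1, 5] and [1, 5.5] select the intended records, it helps to know that IndexedDB compares array keys element by element. The following sketch re-implements that comparison in plain JavaScript, for numbers and arrays of numbers only; compareKeys and inRange are illustrative names, not IndexedDB functions. In a browser, the built-in indexedDB.cmp() performs the real comparison.

```javascript
// Simplified key comparison: supports numbers and (nested) arrays of numbers.
function compareKeys(a, b) {
  const aIsArray = Array.isArray(a);
  const bIsArray = Array.isArray(b);
  if (aIsArray !== bIsArray) return aIsArray ? 1 : -1; // arrays sort after numbers
  if (!aIsArray) return a < b ? -1 : a > b ? 1 : 0;
  const length = Math.min(a.length, b.length);
  for (let i = 0; i < length; i++) {
    const result = compareKeys(a[i], b[i]);
    if (result !== 0) return result; // the first differing element decides
  }
  return compareKeys(a.length, b.length); // a shorter array sorts first
}

// Mirrors the semantics of IDBKeyRange.bound(lower, upper, lowerOpen, upperOpen).
function inRange(key, lower, upper, lowerOpen = false, upperOpen = false) {
  const low = compareKeys(key, lower);
  const high = compareKeys(key, upper);
  return (lowerOpen ? low > 0 : low >= 0) && (upperOpen ? high < 0 : high <= 0);
}

// Index keys have the shape [tsunami, mag]
console.log(inRange([1, 5.2], [1, 5], [1, 5.5], false, true)); // true
console.log(inRange([0, 5.2], [1, 5], [1, 5.5], false, true)); // false
console.log(inRange([1, 5.5], [1, 5], [1, 5.5], false, true)); // false
```

Because the first element decides whenever it differs, every key in the range has tsunami equal to 1, and only then does the magnitude come into play.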

function queryData() {

const store = db.transaction('earthquakes', 'readonly').objectStore('earthquakes');

const magIndex = store.index('mag');

const timeIndex = store.index('time');

const magTsunami = store.index('magTsunami');

// Count all records

store.count().onsuccess = e => console.log(`Number of records: ${e.target.result}`);

// List all earthquakes with a magnitude of 6 and higher

magIndex.getAll(IDBKeyRange.lowerBound(6)).onsuccess = e => e.target.result.forEach(printEarthquake);

// List all earthquakes in the last 6 hours

const sixHoursAgoInMillis = Date.now() - (6*60*60*1000);

timeIndex.getAll(IDBKeyRange.lowerBound(sixHoursAgoInMillis))

.onsuccess = e => e.target.result.forEach(printEarthquake);

// List earthquake with the highest magnitude

magIndex.openCursor(null, 'prev').onsuccess = e => {

const cursor = e.target.result;

if (cursor) {

printEarthquake(cursor.value);

}

else {

console.log('no data available');

}

};

// List all earthquakes that might cause a tsunami and have a magnitude

// between 5 (inclusive) and 5.5 (exclusive)

magTsunami.getAll(IDBKeyRange.bound([1, 5], [1, 5.5], false, true))

.onsuccess = e => e.target.result.forEach(printEarthquake);

}

function printEarthquake(e) {

console.log(`Time: ${new Date(e.properties.time)} - Magnitude: ${e.properties.mag}

- ${e.properties.place}`);

}
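The single-record cursor from the "highest magnitude" query extends naturally to a "top N" query: keep calling cursor.continue() until enough records are collected. The following is a sketch under that assumption; firstN is a hypothetical helper name.

```javascript
// Collects at most n records from a store or index in the given direction.
function firstN(source, query, direction, n) {
  return new Promise((resolve, reject) => {
    const results = [];
    if (n <= 0) {
      resolve(results);
      return;
    }
    const request = source.openCursor(query, direction);
    request.onsuccess = e => {
      const cursor = e.target.result;
      if (!cursor) {
        resolve(results); // fewer than n records matched
        return;
      }
      results.push(cursor.value);
      if (results.length < n) {
        cursor.continue(); // triggers onsuccess again with the next record
      } else {
        resolve(results); // enough records: stop without calling continue()
      }
    };
    request.onerror = () => reject(request.error);
  });
}
```

With the mag index from above, firstN(magIndex, null, 'prev', 5).then(list => list.forEach(printEarthquake)) would print the five strongest earthquakes, starting with the strongest.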